ReCrawler Plugin

ReCrawler is a small WordPress plugin that quickly notifies search engines whenever your website content is created, updated, or deleted.

Improve your rankings by taking control of the crawling and indexing process, so search engines know what to focus on!

Once installed, it detects when pages or terms are created, updated, or deleted in WordPress and automatically submits the affected URLs in the background via the ReCrawler, Google API, Bing API, and Yandex API protocols.

It ensures that search engines always have the latest updates about your site.

🤖 What is ReCrawler?

ReCrawler is an easy way for website owners to instantly inform search engines about the latest content changes on their website. In its simplest form, ReCrawler is a simple ping that tells search engines a URL and its content have been added, updated, or deleted, allowing them to quickly reflect the change in their search results.

Without ReCrawler, it can take days or weeks for search engines to discover that content has changed, because search engines don’t crawl every URL often. With ReCrawler, search engines immediately know which URLs have changed, which helps them prioritize crawling those URLs and limits the organic crawling otherwise needed to discover new content.

ReCrawler is offered under the terms of the Attribution-ShareAlike Creative Commons License and is supported by Microsoft Bing and Yandex.

✅ Requirement for search engines

Search Engines adopting the ReCrawler protocol agree that submitted URLs will be automatically shared with all other participating Search Engines. To participate, search engines must have a noticeable presence in at least one market.

⛑️ Documentation and support

If you have questions or suggestions, visit our GitHub repository.

💙 Love ReCrawler for WordPress?

If the plugin was useful, please give it a 5-star rating and write a few kind words.

🏳️ Translations

- 🇺🇸 English (en_US) – Mikhail kobzarev

- 🇷🇺 Русский (ru_RU) – Mikhail kobzarev

- You could be next…

Can you help with plugin translation? Please feel free to contribute!

External services

This plugin uses external services, which are documented below with links to the service’s Privacy Policy. These services are integral to the functionality and features offered by the plugin. However, we acknowledge the importance of transparency regarding the use of such services.

- Yandex Webmaster – https://webmaster.yandex.ru

- Yandex IndexNow – https://yandex.com/indexnow

- Bing IndexNow – https://www.bing.com/indexnow

- Bing Webmaster – https://ssl.bing.com/webmaster/

- Google Indexing API – https://indexing.googleapis.com/

- Naver IndexNow – https://searchadvisor.naver.com/indexnow

- Seznam IndexNow – https://search.seznam.cz/indexnow

- IndexNow – https://api.indexnow.org

- Google Developers Console – https://console.developers.google.com/

- Yandex OAuth – https://oauth.yandex.ru/

Installation

From your WordPress dashboard

- Visit ‘Plugins > Add New’

- Search for ‘ReCrawler’

- Activate ReCrawler from your Plugins page.

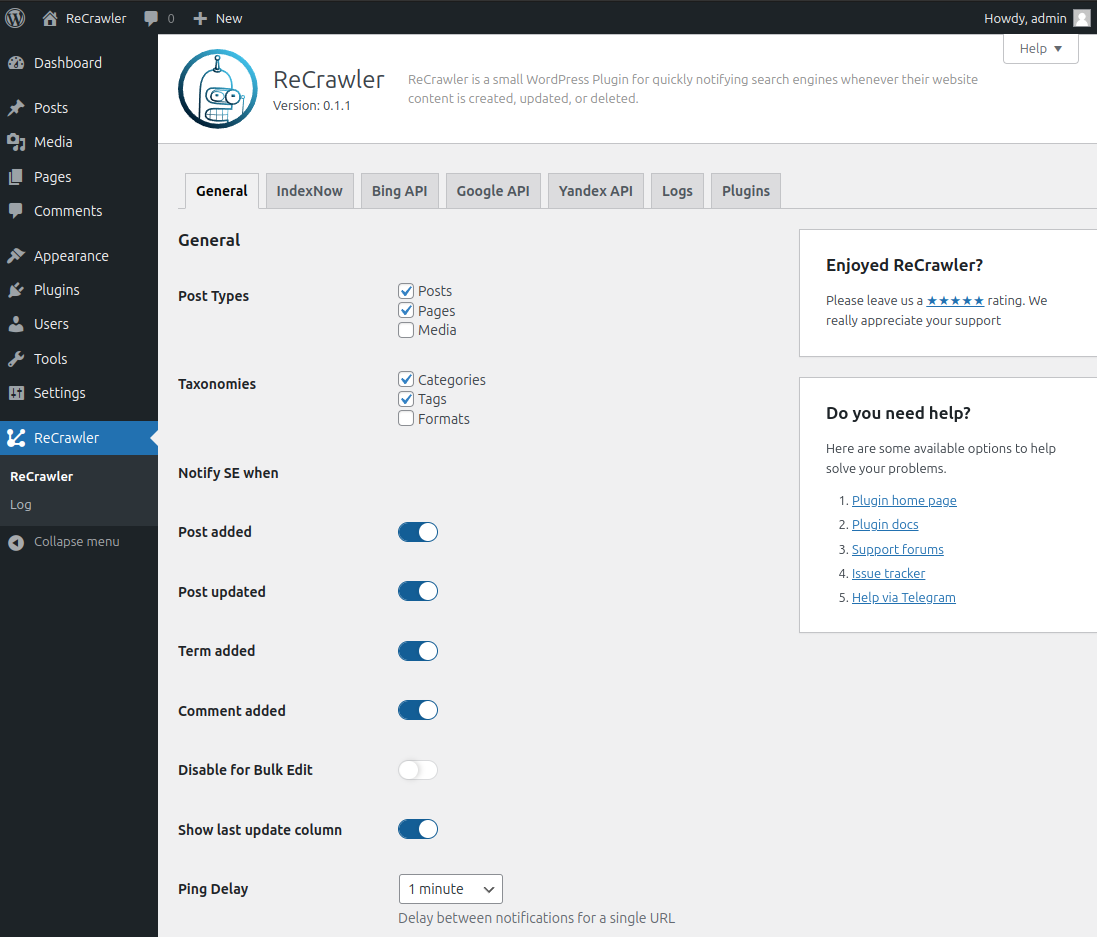

- [Optional] Configure plugin in ‘ReCrawler’.

From WordPress.org

- Download ReCrawler.

- Upload the ‘recrawler’ directory to your ‘/wp-content/plugins/’ directory, using your favorite method (FTP, SFTP, SCP, etc.)

- Activate ReCrawler from your Plugins page.

- [Optional] Configure plugin in ‘ReCrawler > Index Now’.

Screenshots

FAQ

Microsoft Bing – https://www.bing.com/indexnow?url=url-changed&key=your-key

Yandex – https://yandex.com/indexnow?url=url-changed&key=your-key

ReCrawler – https://api.indexnow.org/indexnow/?url=url-changed&key=your-key
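As a rough illustration of how such a notification URL is put together, the following Python sketch builds the GET request URL from the endpoints listed above. The function name `build_ping_url`, the `ENDPOINTS` labels, and the example values are assumptions made for illustration; this is not the plugin’s own code.

```python
from urllib.parse import urlencode

# Endpoints copied from the list above; the dictionary keys are illustrative labels.
ENDPOINTS = {
    "bing": "https://www.bing.com/indexnow",
    "yandex": "https://yandex.com/indexnow",
    "indexnow": "https://api.indexnow.org/indexnow/",
}


def build_ping_url(engine: str, changed_url: str, key: str) -> str:
    """Return the GET URL that notifies `engine` about `changed_url`."""
    # urlencode percent-encodes the changed URL so it is safe as a query value.
    query = urlencode({"url": changed_url, "key": key})
    return f"{ENDPOINTS[engine]}?{query}"


# Example with placeholder values:
print(build_ping_url("bing", "https://example.org/new-post/", "your-key"))
```

Percent-encoding the changed URL matters when it contains its own query string or special characters, since it would otherwise be misread as part of the notification request.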

Starting November 2021, ReCrawler-enabled search engines immediately share all submitted URLs with all other ReCrawler-enabled search engines, so when you notify one, you notify them all.

If search engines like your URL, they will attempt to crawl it to get the latest content quickly, based on their crawl scheduling logic and the crawl quota for your site.

If search engines like your URLs and have enough crawl quota for your site, they will attempt to crawl some or all of these URLs.

Using ReCrawler ensures that search engines are aware of your website changes. Using ReCrawler does not guarantee that web pages will be crawled or indexed by search engines. It may take time for the change to reflect in search engines.

No, you should publish only URLs that have changed (been added, updated, or deleted) since you started using ReCrawler.

Yes, every crawl counts towards your crawl quota. By publishing URLs to ReCrawler, you tell search engines that you care about these URLs, so they will generally prioritize crawling them over other URLs they know.

Search engines can choose not to crawl and index URLs that do not meet their selection criteria.

Search engines can choose not to select a specific URL if it does not meet their selection criteria.

Yes, if you want search engines to discover content as soon as it changes, you should use ReCrawler. You will not have to wait many hours, or worse, weeks to see your changes in search engines.

Avoid submitting the same URL many times a day. If pages are edited often, then it is preferable to wait 10 minutes between edits before notifying search engines. If pages are updated constantly (examples: time in Waimea, Weather in Tokyo), it’s preferable to not use ReCrawler for every change.
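One way to follow this advice is to remember when each URL was last submitted and skip repeats that come too soon. The sketch below is a simplified throttle under that assumption; the class name `SubmissionThrottle` and its parameters are hypothetical and do not describe how the plugin itself behaves.

```python
import time

MIN_INTERVAL_SECONDS = 10 * 60  # the 10-minute spacing suggested above


class SubmissionThrottle:
    """Track the last submission time per URL and reject repeats that come too soon."""

    def __init__(self, min_interval=MIN_INTERVAL_SECONDS, clock=time.monotonic):
        self.min_interval = min_interval
        self.clock = clock  # injectable so the logic can be tested without waiting
        self._last_sent = {}

    def should_submit(self, url):
        """Return True and record the time if `url` may be submitted now."""
        now = self.clock()
        last = self._last_sent.get(url)
        if last is not None and now - last < self.min_interval:
            return False  # submitted too recently; skip this notification
        self._last_sent[url] = now
        return True


throttle = SubmissionThrottle()
if throttle.should_submit("https://example.org/weather/"):
    print("notify search engines")
```

Note that a skipped edit is simply dropped here; a fuller version would queue the URL and send one notification after the quiet period ends.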

Yes, you can submit dead links (HTTP 404, HTTP 410) to notify search engines about new dead links.

Yes, you can submit newly redirecting URLs (for example, a 301 redirect, a 302 redirect, or HTML with a meta refresh tag) to notify search engines that the content has changed.

Use ReCrawler to submit only URLs that have changed (been added, updated, or deleted) recently, including all URLs if all of them have changed recently. Use sitemaps to inform search engines about all your URLs. Search engines will visit sitemaps every few days.

An HTTP 429 Too Many Requests response status code indicates that you are sending too many requests in a given amount of time. Slow down or retry later.

Search engines will attempt to crawl the {key}.txt file only once to verify ownership when they receive a new key. Also, you don’t need to modify your key often.
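A common way to "slow down or retry later" is exponential backoff. The sketch below assumes a hypothetical zero-argument callable `send` that performs the request and returns the HTTP status code; the function name, attempt count, and delays are illustrative choices, not values prescribed by any search engine.

```python
import time


def submit_with_backoff(send, max_attempts=4, base_delay=1.0, sleep=time.sleep):
    """Call send() until it returns something other than 429 or attempts run out.

    `send` is a hypothetical callable that performs the request and
    returns the HTTP status code as an integer.
    """
    status = None
    for attempt in range(max_attempts):
        status = send()
        if status != 429:
            return status  # success or a different error: stop retrying
        if attempt < max_attempts - 1:
            # Double the wait after each 429: base_delay, 2x, 4x, ...
            sleep(base_delay * 2 ** attempt)
    return status  # still 429 after the final attempt


# Example with a stand-in sender that is rate-limited twice, then succeeds:
responses = iter([429, 429, 200])
print(submit_with_backoff(lambda: next(responses), base_delay=0.01))
```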

Yes, if your websites use different Content Management Systems, each Content Management System can use its own key; publish different key files at the root of the host.

No, each host in your domain must have its own key file. If your site has host-a.example.org and host-b.example.org, you need to have a key file for each host.

Yes, you can reuse the same key on two or more hosts, and two or more domains.
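To make the per-host rule concrete, this small Python sketch derives where the `{key}.txt` file would be expected for a given page, assuming it is published at the root of the page’s host as described above. The function name `key_file_url` and the sample key are hypothetical.

```python
from urllib.parse import urlsplit


def key_file_url(page_url: str, key: str) -> str:
    """Return the root-level {key}.txt location for the host serving `page_url`."""
    parts = urlsplit(page_url)
    # Keep only scheme and host; the key file sits at the root of that host.
    return f"{parts.scheme}://{parts.netloc}/{key}.txt"


# The same key can be reused, but each host needs its own copy of the file:
for page in ("https://host-a.example.org/blog/post/", "https://host-b.example.org/shop/"):
    print(key_file_url(page, "abc123"))
```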

Yes. While sitemaps are an easy way for webmasters to inform search engines about all pages on their sites that are available for crawling, search engines visit sitemaps infrequently. With ReCrawler, webmasters don’t have to wait for search engines to discover and crawl sitemaps; they can notify search engines of new content directly.

See the documentation available from each search engine for more details about ReCrawler.

Changelog

0.1.3 (26.06.2024)

- Updated logo of the application

- Fixed tab switching bug

0.1.2 (15.05.2024)

- Added ability to sort by ReCrawler column in the list of Posts

- Added ability to migrate from IndexNow plugin in automatic mode

- Updated screenshots of the application

- Reduced the size of images in Google API documentation

0.1.1 (12.05.2024)

- Added WordPress 6.5+ support

0.1.0 (21.02.2024)

- Init plugin