Block AI Crawlers Plugin

Tell AI crawlers not to access your site to train their models.

This plugin tells AI crawlers (such as OpenAI’s ChatGPT) not to use your website as training data for their artificial intelligence (AI) products. It does this by adding rules to your site’s robots.txt that block common AI crawlers and scrapers. Compliant crawlers read a site’s robots.txt before fetching pages to check whether they have been asked not to index it.

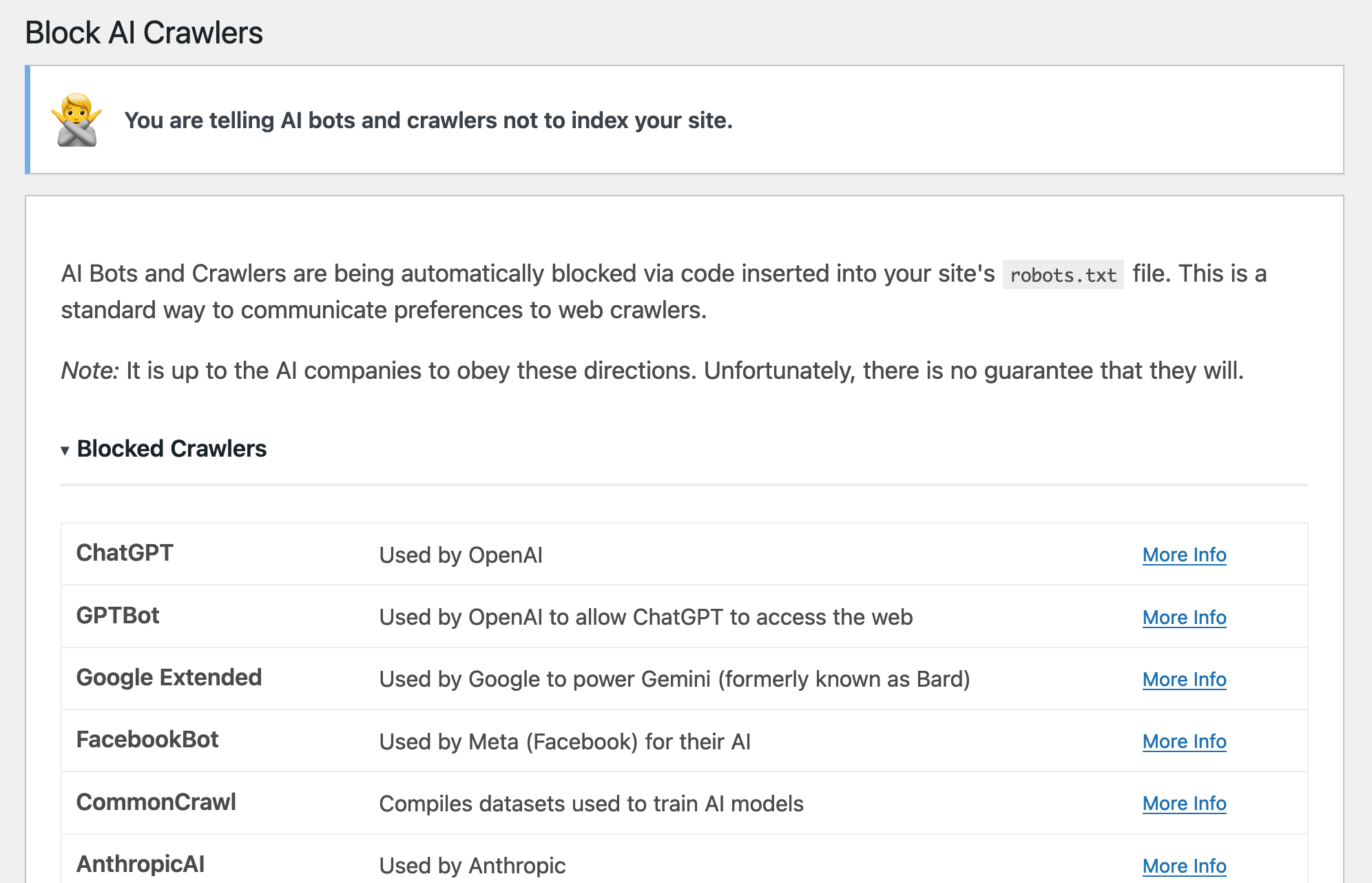

It blocks these AI crawlers and bots:

- ChatGPT and GPTBot – Crawlers and web browser used by OpenAI

- Google Extended – Crawler used for Google’s Gemini (formerly Google Bard) AI training

- FacebookBot – Crawler used for Facebook’s AI training

- CommonCrawl – Crawler that compiles datasets used to train AI models

- Anthropic AI / Claude – Crawler used by Anthropic

- Omgili – Crawler used by Omgili for AI training

- Bytespider – Crawler used by TikTok for AI training

- PerplexityBot – Used by Perplexity for its AI products

- Applebot – Used by Apple to train its AI products

- Cohere – Crawler used by Cohere for AI training

- DiffBot – Crawler used by Diffbot for AI training

- Imagesift – Crawler used by Imagesift for images
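To make the mechanism concrete, here is a minimal Python sketch of the kind of robots.txt rules the list above corresponds to, plus a check of how a compliant crawler would read them. It is an illustration only, not the plugin's own code (the plugin runs inside WordPress). The user-agent tokens and the function names `build_block_rules` and `is_allowed` are assumptions made for this example; the plugin's actual list may differ.

```python
from urllib.robotparser import RobotFileParser

# Assumed user-agent tokens for illustration; not taken from the plugin source.
AI_USER_AGENTS = [
    "GPTBot",
    "ChatGPT-User",
    "Google-Extended",
    "FacebookBot",
    "CCBot",
    "anthropic-ai",
    "ClaudeBot",
    "omgili",
    "Bytespider",
    "PerplexityBot",
    "Applebot-Extended",
    "cohere-ai",
    "Diffbot",
    "ImagesiftBot",
]


def build_block_rules(user_agents):
    """Return robots.txt text that disallows the whole site for each agent."""
    lines = []
    for agent in user_agents:
        lines.append(f"User-agent: {agent}")
        lines.append("Disallow: /")
        lines.append("")  # a blank line separates rule groups
    return "\n".join(lines)


def is_allowed(robots_txt, user_agent, url="/"):
    """Check, as a compliant crawler would, whether a URL may be fetched."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    rules = build_block_rules(AI_USER_AGENTS)
    print(rules)
    print("GPTBot allowed:", is_allowed(rules, "GPTBot"))
    print("Googlebot allowed:", is_allowed(rules, "Googlebot"))
```

Because each group names a specific user agent, crawlers that are not listed (for example, ordinary search engine crawlers) are not affected by these rules.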

Experimental Meta Tags

The plugin adds the “noai, noimageai” directive to your site’s meta tags. These tags tell AI bots not to use your content as part of their data sets. These are experimental and they have not been standardized.
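As a rough way to confirm the directive is present on a page, the following Python sketch parses HTML and looks for the two values. It assumes the directive is emitted as `<meta name="robots" content="noai, noimageai">`; the exact markup the plugin produces is not stated here, and the names `RobotsMetaParser` and `opts_out_of_ai` are made up for this example.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        # Assumption: the directive lives in a meta tag named "robots".
        if (attributes.get("name") or "").lower() != "robots":
            return
        content = attributes.get("content") or ""
        for directive in content.split(","):
            directive = directive.strip().lower()
            if directive:
                self.directives.add(directive)


def opts_out_of_ai(html):
    """Return True if the page carries both noai and noimageai."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return {"noai", "noimageai"} <= parser.directives


if __name__ == "__main__":
    page = '<head><meta name="robots" content="noai, noimageai"></head>'
    print(opts_out_of_ai(page))
```

Since the tags are not standardized, a positive result only shows that the request is present in the page, not that any particular crawler acts on it.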

Disclaimer

Note: While the plugin adds these markers, it is up to the crawlers themselves to honor these requests.

Installation

- Activate the plugin through the ‘Plugins’ menu in WordPress

- Once activated, the plugin works automatically. There are no user-configurable settings

- You can see which crawlers are being blocked at “Settings > Block AI Crawlers”

FAQ

Unfortunately, no. However, it does tell bots that your site shouldn’t be used for future datasets.

The plugin adds directives to the robots.txt file to tell AI crawlers that they shouldn’t index your site. It also adds the noai meta tag to your site’s header to do the same.

If you have a physical robots.txt file on your web server, you won’t be able to activate this plugin. The plugin only works when using WordPress’ built-in virtual robots.txt.
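If you are unsure whether your server has a physical robots.txt, a small check like the Python sketch below can help. It only tests whether the file exists in a given directory; the function name `has_physical_robots_txt` and the example path are hypothetical, and you would need to substitute your own site's document root.

```python
from pathlib import Path


def has_physical_robots_txt(site_root):
    """Return True if a real robots.txt file exists in the site root."""
    return (Path(site_root) / "robots.txt").is_file()


if __name__ == "__main__":
    # Hypothetical document root; replace with your server's actual path.
    print(has_physical_robots_txt("/var/www/html"))
```

If this returns True, the web server will normally serve that file directly, so WordPress’ virtual robots.txt is never generated for the request.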

It should in theory. It just appends the directives to the robots.txt file.

No. Search engines follow different robots.txt rules.

Changelog

1.3.6

- New: Block Perplexity

- New: Block Apple AI

- Update: FAQ based on submitted question

1.3.5

- New: Block additional Omgili bot

- New: Block Imagesift

- Fix: Fix settings page

- Add: blueprint.json for plugin preview

1.3.3

- Fix: Issue with fatal errors on activation

1.3.1

- New: Blocks Anthropic’s Claude

- Fix: Missing external link icons

- Update: Bump tested to v6.5.3

1.3.0

- New: Adds settings page showing blocked crawlers

- Enhancement: Remove crawler description in robots.txt

1.2.2

- Update: Adds deploy from GitHub

1.2.1

- Maintenance: Adds deploy from GitHub

1.2.0

- Block Cohere crawler

- Block DiffBot crawler

- Block Anthropic AI crawler

- Indicate compatibility w/WordPress 6.5.2

1.1.0

- Blocks additional crawlers.

1.0.0

Initial Release.